You know what sounds like a really bad idea?

Deleting 3,000 pages from the HubSpot Blog.

You know what our SEO and Web Dev teams did in February?

Deleted 3,000 pages from the HubSpot Blog.

No, this wasn't some Marie Kondo-ing team-bonding exercise gone horribly, horribly wrong (although those posts were definitely not sparking joy).

It was a project that our head of technical SEO, Victor Pan, and I had wanted to run for a long time -- because counterintuitively, getting rid of content on your site can actually be fantastic for SEO.

In the SEO world, this practice is called "content pruning". But while it's a good idea in theory, content pruning doesn't mean you should go crazy and hack away at your content like it's a tree and you've got a chainsaw. It's far more methodical than that -- like trimming a bonsai.

I'll get to the results we saw at the end of this post. But first, let's explore what content pruning is, and then dive into a step-by-step content audit process, so you'll have a detailed blueprint of how to do this for your own property (or your client's).

Which brings us to the next question:

How often should you run a content audit?

Like almost everything in SEO, it depends. If you have a large site, you may want to audit a different section every month. If you have a small site, consider evaluating the entire site every six months.

I typically recommend starting with a quarterly audit to see how much value you receive from doing one. If you end up with so many next steps you feel overwhelmed, try running them more often. If you're not learning that much, run them less often.

Why run a content audit?

When my team kicked off this project, we already knew there were a ton of older pages on the HubSpot blog that were getting essentially zero traffic -- we just didn't know how many. Our goal from the start was pruning this content.

However, even if that wasn't the case, there are still several reasons to run periodic content audits:

- Identify content gaps: where are you missing content?

- Identify content cannibalization: where do you have too much content?

- Find outdated pages: do you still have legacy product pages? Landing pages with non-existent offers? Pages for events that happened several years ago? Blog posts with out-of-date facts or statistics?

- Find opportunities for historical optimization: are there any pages that are ranking well but could be ranking higher? What about pages that have decreased in rank?

- Learn what's working: what do your highest-traffic and/or highest-converting pages have in common?

- Fix your information architecture: is your site well-organized? Does the structure reflect the relative importance of pages? Is it easy for search engines to crawl?

Choosing your goal from the beginning is critical for a successful content audit, because it dictates the data you'll look at.

In this post, we'll cover content audits that'll help you prune low-performing content.

1. Define the scope of your audit.

First, determine the scope of your audit -- in other words, do you want to evaluate a specific set of pages on your site or the whole enchilada?

If this is your first time doing a content audit, consider starting with a subsection of your site (such as your blog, resource library, or product/service pages).

The process will be a lot less overwhelming if you choose a subsection first. Once you've gotten your sea legs, you can take on the entire thing.

2. Run a crawl using a website crawler.

Next, it's time to pull some data.

I used Screaming Frog's SEO Spider for this step. This is a fantastic tool for SEO specialists, so if you're on the fence, I'd go for it -- you'll definitely use the spider for other projects. And if you've got a small site, you can use the free version, which will crawl up to 500 URLs.

Ahrefs also offers a site audit (available for every tier), but I haven't used it, so I can't speak to its quality.

Additionally, Wildshark offers a completely free crawler that has a very beginner-friendly reputation (although it only works on Windows, so Mac users will need to look elsewhere).

Finally, if you want to run a one-time audit, check out Scrutiny for Mac. It's free for 30 days and will crawl an unlimited number of URLs -- meaning it's perfect for trying before buying, or for one-off projects.

Once you've picked your weapon of choice, enter the root domain, subdomain, or subfolder you selected in step one.

For instance, since I was auditing the HubSpot blog, I only wanted to look at URLs that began with "blog.hubspot.com". If I were auditing our product pages, I would've wanted to look at URLs that began with "www.hubspot.com/products".

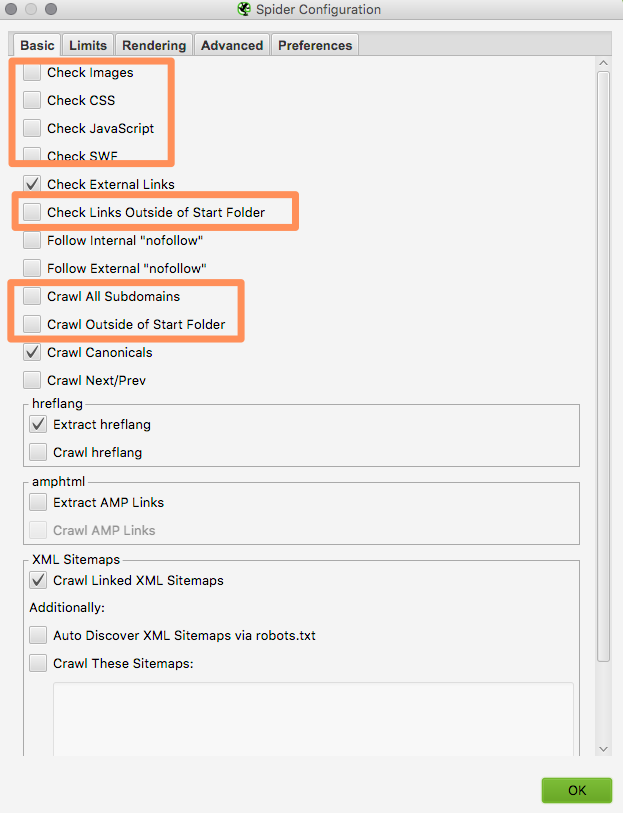

If you're using Screaming Frog, select Configuration > Spider. Then, deselect:

- Check Images

- Check CSS

- Check JavaScript

- Check Links Outside Folder

- Crawl All Subdomains

- Crawl Outside Folder

Next, tab over to "Limits" and make sure that "Limit Crawl Depth" isn't checked.

What if the pages you're investigating don't roll up to a single URL? You can always pull the data for your entire website and then filter out the irrelevant results.
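If you do crawl the whole site, a few lines of Python can handle that filtering. This is a minimal sketch, not part of our actual workflow: `SCOPE`, `in_scope`, and the example URLs are hypothetical, and it assumes your crawl export contains full URLs with the scheme included.

```python
from urllib.parse import urlsplit

# Hypothetical audit scope: (host, path prefix) pairs. "/" means the whole host.
SCOPE = [
    ("blog.hubspot.com", "/"),
    ("www.hubspot.com", "/products"),
]

def in_scope(url, scope=SCOPE):
    """Return True if the URL's host matches and its path sits under the prefix."""
    parts = urlsplit(url)
    host = parts.netloc.lower()
    path = parts.path or "/"
    for scope_host, prefix in scope:
        folder = prefix.rstrip("/") + "/"  # "/products" -> "/products/", "/" -> "/"
        if host == scope_host and (path == prefix or path.startswith(folder)):
            return True
    return False

crawled = [
    "https://blog.hubspot.com/marketing/instagram-tips",
    "https://www.hubspot.com/products/crm",
    "https://www.hubspot.com/pricing",
]
print([url for url in crawled if in_scope(url)])
```

Matching on folder boundaries means a URL like "/products-old" won't sneak in just because it starts with the same characters as "/products".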

After you've configured your crawl, hit "OK" and "Start".

The crawl will probably take some time, so meanwhile, let's get some traffic data from Google Analytics.

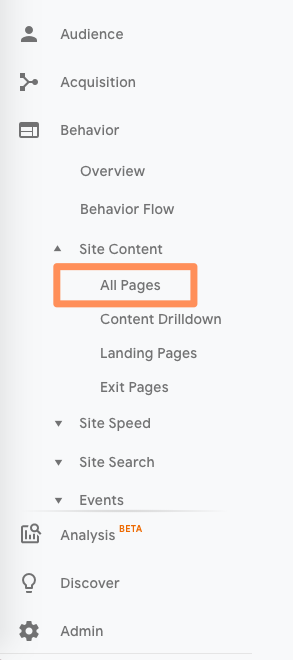

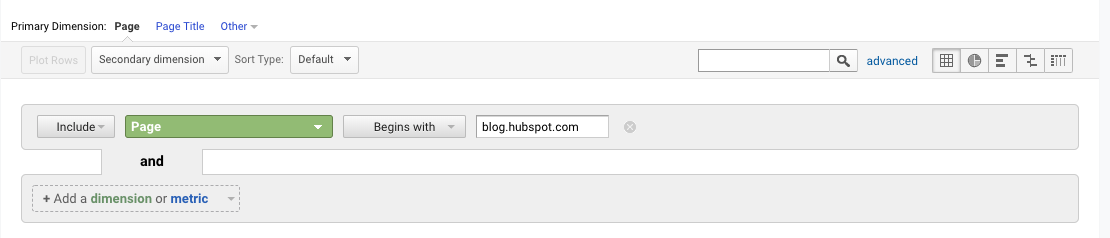

Since we're evaluating each page, we need the "Site Content > All Pages" report.

If you have a view set up for this section of the site, go to it now. I used the view for "blog.hubspot.com".

If you don't have a view, add a filter for pages beginning with [insert URL path here].

Adjust the date range to the last six to twelve months, depending on the last time you ran an audit.

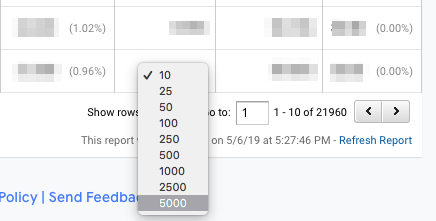

(Also, don't forget to scroll down and change "Show rows: 10" to "Show rows: 5000".)

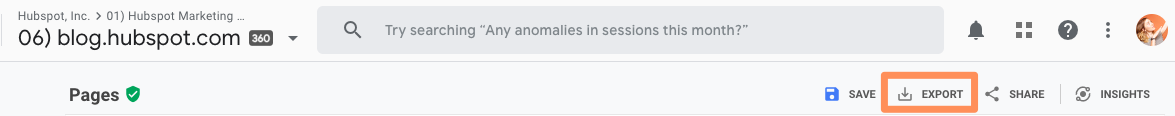

Then, export that data into a Google Sheet.

Title the sheet something like "Content Audit [Month Year] for [URL]". Name the tab "All Traffic [Date Range]".

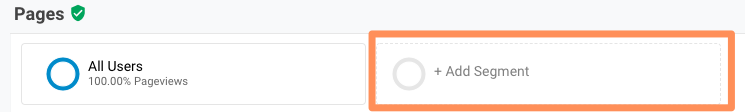

Then go back to GA, click "Add Segment", uncheck "All Users", and check "Organic Users". Keep everything else the same.

(It's a lot easier to pull two reports and combine them with a VLOOKUP than to add both segments to your report at once.)

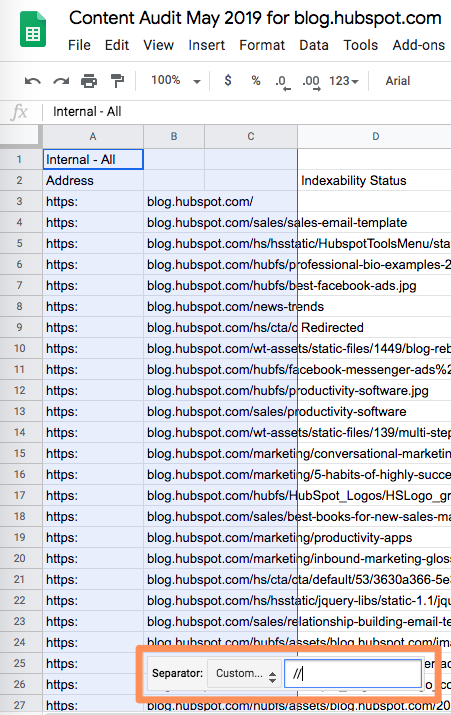

Once it's finished processing, click Export. Copy and paste the data into a new tab in the original content audit spreadsheet, and name it "Organic Traffic [Date Range]".

Here's what you should have:

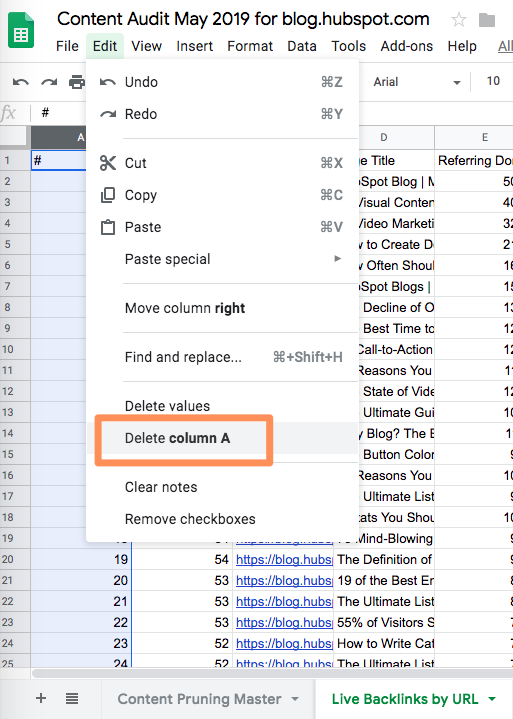

At this point, I copied the entire spreadsheet and named this copy "Raw Data: Content Audit May 2019 for blog.hubspot.com." This gave me the freedom to delete a bunch of columns without worrying that I'd need that data later.

Now that I had a backup version, I deleted columns B and D-H (Pageviews, Entrances, % Exit, and Page Value) on both sheets. Feel free to keep whatever columns you'd like; just make sure both sheets have the same ones.

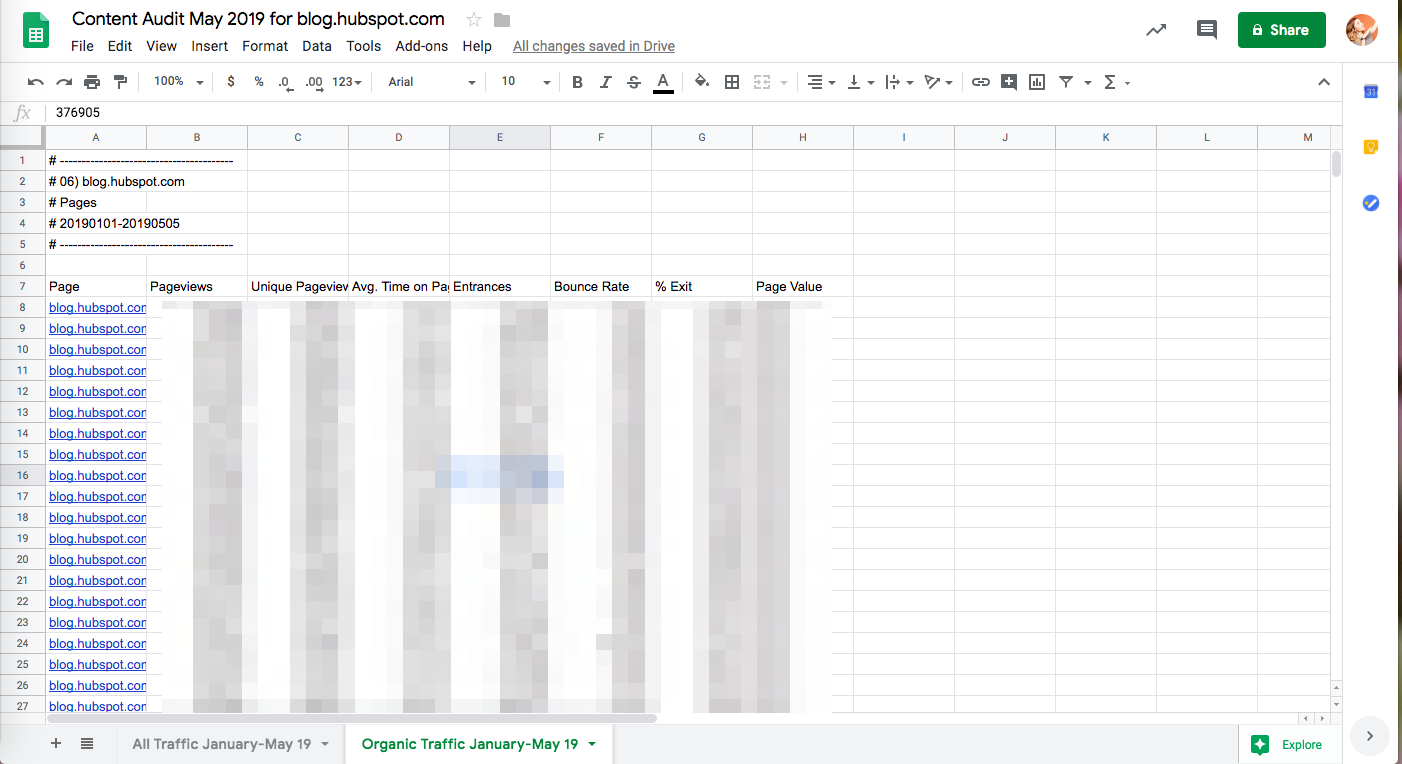

Hopefully, your Screaming Frog crawl is done by now. Click "Export" and download it as a CSV (not .xlsx!) file.

Now, click "File > Import" and select your Screaming Frog file. Title it "Screaming Frog Crawl_[Date]". Then click the small downward arrow and select "Copy to > Existing spreadsheet".

Name the new sheet "Content Pruning Master". Add a filter to the top row.

Now we've got a raw version of this data and another version we can edit freely without worrying we'll accidentally delete information we'll want later.

Alright, let's take a breath. We've got a lot of data in this sheet -- and Google Sheets is probably letting you know it's tired by running slower than usual.

I deleted a bunch of columns to help Sheets recover, specifically:

- Content

- Status

- Title 1 Length

- Title 1 Pixel Width

- Meta Description 1

- Meta Description 1 Pixel Width

- Meta Keyword 1

- Meta Keywords 1 Length

- H1-1

- H1-1 Length

- H2-1

- H2-1 Length

- Meta Robots 1

- Meta Robots 2

- Meta Refresh 1

- Canonical Link Element 2

- rel="next" 1 (laughs bitterly)

- rel="prev" 1 (keeps laughing bitterly)

- Size (bytes)

- Text Ratio

- % of Total

- Link Score

Again, this goes back to the goal of your audit. Keep the information that'll help you accomplish that objective and get rid of everything else.

Next, add two columns to your Content Pruning Master. Name the first one "All Users [Date Range]" and the second "Organic Users [Date Range]".

Hopefully you see where I'm going with this.

Unfortunately, we've run into a small roadblock. All the Screaming Frog URLs begin with "http://" or "https://", but our GA URLs begin with the root or subdomain. A normal VLOOKUP won't work.

Luckily, there's an easy fix. First, select cell A1, then choose "Insert > Column Right". Do this a few times so you have several empty columns between your URLs (in Column A) and the first column of data. Now you won't accidentally overwrite anything in this next step:

Highlight Column A, select "Data > Split text to columns", and then choose the last option, "Custom".

Enter two forward slashes.

Hit "Enter", and now you'll have the truncated URLs in Column B. Delete Column A, as well as the empty columns.
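If you'd rather do this step in a script, here's a rough Python equivalent. It's a sketch, and `strip_protocol` is a hypothetical helper name. One design difference: splitting on "//" in Sheets breaks a URL into extra columns if "//" appears anywhere else in it, while this version only removes a leading "http://" or "https://".

```python
def strip_protocol(url):
    """Remove a leading "http://" or "https://" so crawl URLs line up with GA's."""
    for prefix in ("https://", "http://"):
        if url.lower().startswith(prefix):
            return url[len(prefix):]
    return url

print(strip_protocol("https://blog.hubspot.com/marketing"))  # blog.hubspot.com/marketing
```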

This is also a good time to get rid of any URLs with parameters. For instance, imagine Screaming Frog found your landing page, offers.hubspot.com/instagram-engagement-report. It also found the parameterized version of that URL: offers.hubspot.com/instagram-engagement-report?hubs_post-cta=blog-homepage

Or, perhaps you use a question mark for filters, such as "https://ift.tt/30t1Qg3".

According to GA, these parameterized URLs get very little organic traffic. You don't want to accidentally delete a page because you were looking at the parameterized URL's stats instead of the original's.

To solve this, run the same "split text to columns" process as before, but with the following symbols:

- #

- ?

- !

- &

- =

This will probably create some duplicates. You can either remove them with an add-on (no, Sheets doesn't offer deduping, which is a little crazy) or download your sheet to Excel, dedupe your data there, and then reupload to Sheets.
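A script can do both the parameter stripping and the deduplication in one pass. Here's a minimal Python sketch; `strip_parameters` and `dedupe` are hypothetical names, and it cuts each URL at the first of the same delimiters listed above. Since "!", "&", and "=" can legitimately appear in some URL paths, spot-check what gets cut before you rely on it.

```python
import re

# The same delimiters used in the "split text to columns" pass above.
PARAM_START = re.compile(r"[#?!&=]")

def strip_parameters(url):
    """Cut the URL at the first delimiter, keeping only the part before it."""
    match = PARAM_START.search(url)
    return url[:match.start()] if match else url

def dedupe(urls):
    """Strip parameters, then keep the first copy of each URL, preserving order."""
    return list(dict.fromkeys(strip_parameters(url) for url in urls))

urls = [
    "offers.hubspot.com/instagram-engagement-report",
    "offers.hubspot.com/instagram-engagement-report?hubs_post-cta=blog-homepage",
]
print(dedupe(urls))  # ['offers.hubspot.com/instagram-engagement-report']
```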

3. Evaluate pages with non-200 HTTP status codes.

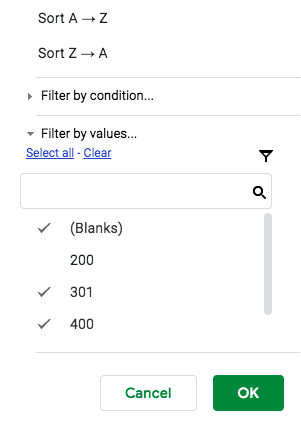

I recommend filtering the URLs that triggered a non-200 response and putting them into a separate sheet:

Here's what to investigate:

Redirect audit:

- How many redirects do you have?

- Are there any redirect chains (or multi-step redirects, which make your page load time go up)?

- Do you have internal links to pages that are 301ing?

- Are any of your canonicalized pages 301ing? (This is bad because you don't want to indicate a page is the canonical version if it's redirecting to another page.)
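Spotting chains by eye gets tedious on a big site. Here's a hypothetical Python sketch that follows each redirect to its end, assuming you've exported a mapping of each redirecting URL to the URL it points at (most crawlers report the redirect target alongside the address). `find_redirect_chains` and the example URLs are illustrative only.

```python
def find_redirect_chains(redirects):
    """Return {start_url: [start, hop, ..., final]} for every redirect that takes 2+ hops.

    `redirects` maps each redirecting URL to the URL it points at. A loop is
    reported with the repeated URL as the last entry.
    """
    chains = {}
    for start in redirects:
        path, seen = [start], {start}
        current = start
        while current in redirects:
            current = redirects[current]
            path.append(current)
            if current in seen:  # redirect loop: stop instead of spinning forever
                break
            seen.add(current)
        if len(path) > 2:  # start -> intermediate -> ... means at least two hops
            chains[start] = path
    return chains

redirects = {
    "blog.hubspot.com/old-post": "blog.hubspot.com/newer-post",
    "blog.hubspot.com/newer-post": "blog.hubspot.com/newest-post",
}
print(find_redirect_chains(redirects))
```

Each chain it reports is a candidate for pointing the first URL straight at the final destination.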

404 error audit:

- Do you have internal links to pages that are 404ing?

- Can you redirect any broken links to relevant pages?

- Are any of your 404 errors caused by backlinks from mid- to high-authority websites? If so, consider reaching out to the site owner and asking them to fix the link.
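To answer the internal-link questions in both lists at once, you can cross-reference your crawler's inlinks data against each URL's status code. This is a hypothetical sketch: `links_to_fix` is a made-up helper, and it assumes you've exported (source, target) link pairs plus a status code per crawled URL.

```python
def links_to_fix(links, status_by_url):
    """List internal links whose target returned something other than a 200.

    `links` is an iterable of (source_url, target_url) pairs; `status_by_url`
    maps crawled URLs to their HTTP status codes. Targets missing from the
    crawl are skipped rather than guessed at.
    """
    problems = []
    for source, target in links:
        status = status_by_url.get(target)
        if status is not None and status != 200:
            problems.append((source, target, status))
    return problems

links = [
    ("blog.hubspot.com/a", "blog.hubspot.com/gone"),
    ("blog.hubspot.com/a", "blog.hubspot.com/live"),
    ("blog.hubspot.com/b", "blog.hubspot.com/moved"),
]
statuses = {"blog.hubspot.com/gone": 404, "blog.hubspot.com/live": 200, "blog.hubspot.com/moved": 301}
for source, target, status in links_to_fix(links, statuses):
    print(f"{source} links to {target} ({status})")
```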

4. Pull in traffic and backlink data.

Once you've standardized your URLs and removed all the broken and redirected links, pull in the traffic data from GA.

Add two columns to the right of Column A. Name them "All Traffic [Date Range]" and "Organic Traffic [Date Range]".

Use this formula for Column B:

=INDEX('All Traffic [Date Range]'!C:C,(MATCH(A2,'All Traffic [Date Range]'!A:A,0)))

My sheet was called All Traffic January-May 19, so here's what my formula looked like:

=INDEX('All Traffic January-May 19'!C:C,(MATCH(A2,'All Traffic January-May 19'!A:A,0)))

Use this formula for Column C:

=INDEX('Organic Traffic [Date Range]'!C:C,(MATCH(A2,'Organic Traffic [Date Range]'!A:A,0)))

Here was my formula:

=INDEX('Organic Traffic January-May 19'!C:C,(MATCH(A2,'Organic Traffic January-May 19'!A:A,0)))

Once you've added this, click the small box in the lower right-hand corner of cells B2 and C2 to extend the formulas to the entire columns.
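Each of these INDEX/MATCH formulas is essentially a left join on the URL. If Sheets gets too sluggish, here's a hypothetical Python equivalent: `index_match` is an illustrative name, the example rows are made up, and the column positions (0 for Column A, 2 for Column C) mirror the formulas above. It compares URLs exactly as written.

```python
def index_match(keys, rows, key_col=0, value_col=2):
    """Return, for each key, value_col from the first row whose key_col equals it.

    Mirrors =INDEX(Sheet!C:C, MATCH(A2, Sheet!A:A, 0)): the first exact match
    wins, and None stands in for the #N/A you'd see in Sheets.
    """
    first_match = {}
    for row in rows:
        first_match.setdefault(row[key_col], row[value_col])
    return [first_match.get(key) for key in keys]

# Hypothetical rows from the "Organic Traffic" tab: (Page, Pageviews, Unique Pageviews)
organic = [
    ("blog.hubspot.com/instagram-tips", 140, 120),
    ("blog.hubspot.com/old-webinar", 12, 9),
]
print(index_match(["blog.hubspot.com/old-webinar", "blog.hubspot.com/not-in-ga"], organic))
# [9, None]
```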

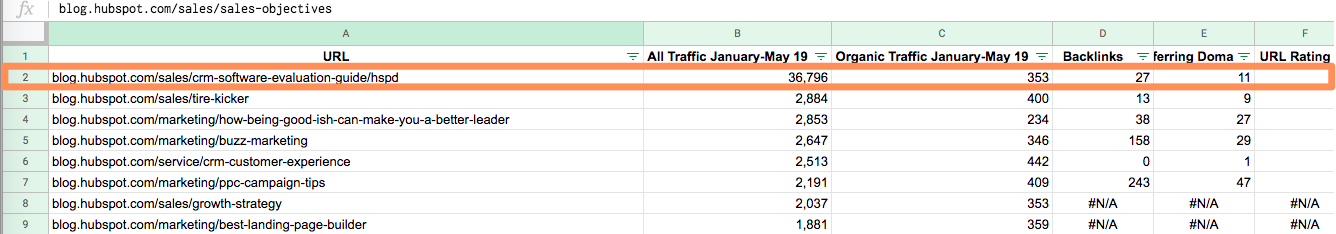

Next, we need backlinks and keywords for each URL.

I used Ahrefs to get this, but feel free to use your tool of choice (SEMrush, Majestic, cognitiveSEO, etc.).

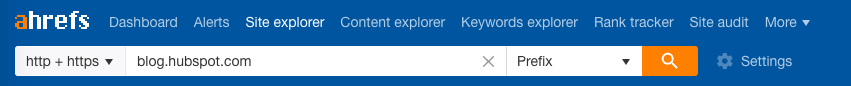

First, enter the root domain, subdomain, or subfolder you selected in step one.

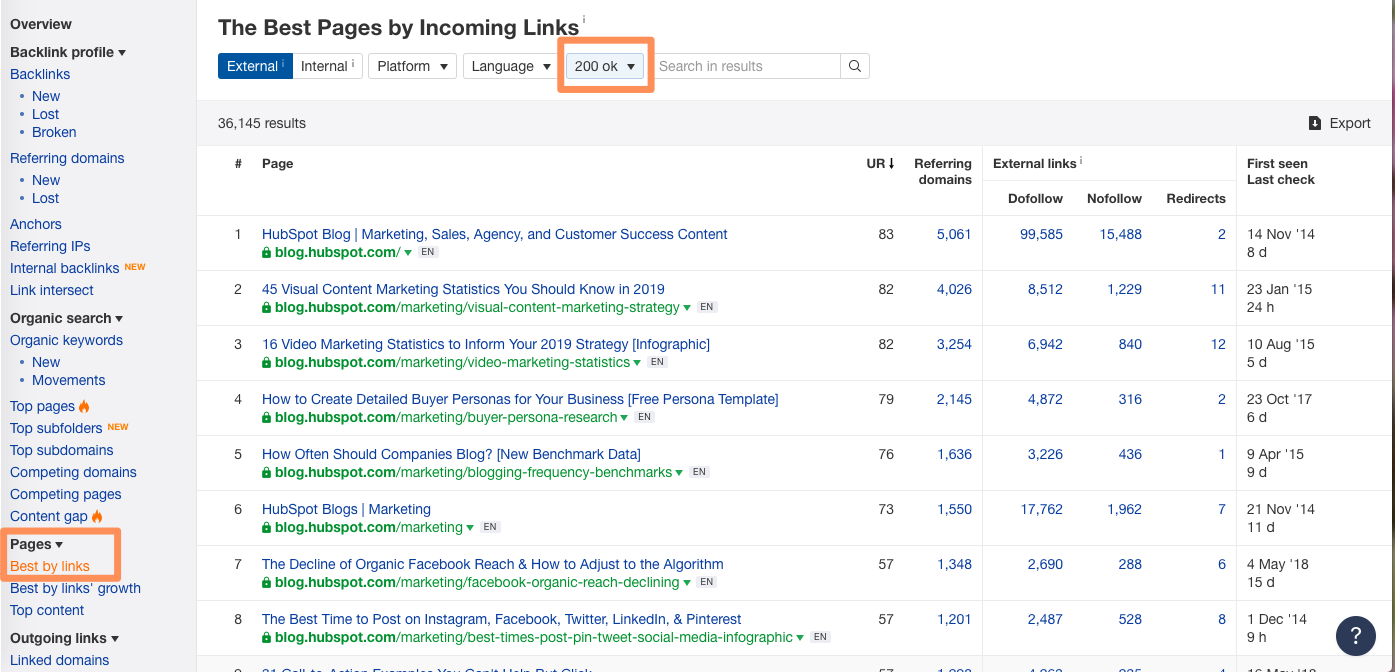

Then, select "Pages > Best by links" in the left-hand sidebar.

To filter your results, change the HTTP status code to "200" -- we only care about links to live pages.

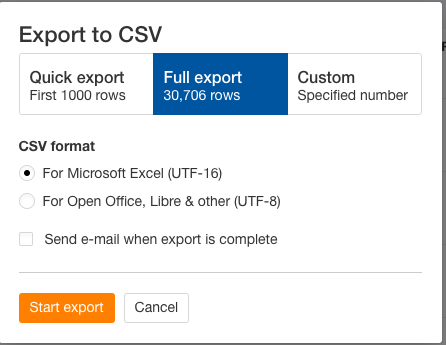

Click the Export icon on the right. Ahrefs will default to the first 1,000 results, but we want to see everything, so select "Full export".

While that's processing, add a sheet to your spreadsheet titled "Live Backlinks by URL". Then add three columns (D, E, and F) to the Content Pruning Master sheet named "Backlinks", "Referring Domains", and "URL Rating", respectively.

Import the Ahrefs CSV file into your spreadsheet. You'll need to repeat the "Split text to columns" process to remove the protocol (http:// and https://) from the URLs. You'll also need to delete Column A.

In Column D (Backlinks), use this formula:

=INDEX('Live Backlinks by URL'!E:E,(MATCH(A2,'Live Backlinks by URL'!B:B,0)))

In Column E (Referring Domains), use this formula:

=INDEX('Live Backlinks by URL'!D:D,(MATCH(A2,'Live Backlinks by URL'!B:B,0)))

In Column F (URL Rating), use this formula:

=INDEX('Live Backlinks by URL'!A:A,(MATCH(A2,'Live Backlinks by URL'!B:B,0)))

5. Evaluate each page using predefined performance criteria.

Now for every URL we can see:

- All the unique pageviews it received for the date range you've selected

- All the organic unique pageviews it received for that date range

- Its indexability status

- How many backlinks it has

- How many unique domains are linking to it

- Its URL rating (i.e., its page authority)

- Its title

- Its title length

- Its canonical URL (whether it’s self-canonical or canonicalizes to a different URL)

- Its word count

- Its crawl depth

- How many internal links point to it

- How many unique internal links point to it

- How many outbound links it contains

- How many unique outbound links it contains

- How many of its outbound links are external

- Its response time

- The date it was last modified

- Which URL it redirects to, if applicable

This may seem like an overwhelming amount of information. However, when you're removing content, you want to have as much information as possible -- after all, once you've deleted or redirected a page, it's hard to go back. Having this data means you'll make the right calls.

Next, it's finally time to analyze your content.

Click the filter arrow on Column C ("Organic Traffic [Date Range]"), then choose "Condition: Less than" and enter a number.

I chose 450, which meant I'd see every page that had received fewer than 90 unique pageviews per month from search over the last five months (450 spread over five months). Adjust this number based on the amount of organic traffic your pages typically receive. Aim to filter out the top 80%.
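The arithmetic behind that threshold is easy to sanity-check in code. A sketch with hypothetical helper names and made-up traffic numbers: 450 organic unique pageviews over five months works out to 90 per month, and if you want the cutoff that leaves roughly the bottom 20% of pages, you can read it off the sorted traffic values.

```python
def monthly_average(total_views, months):
    """Average monthly views for a total collected over `months` months."""
    return total_views / months

def bottom_share_cutoff(organic_views, share=0.2):
    """Return a 'less than' cutoff that leaves roughly the lowest `share` of pages.

    Ties at the cutoff value can make the actual share smaller than requested.
    """
    ordered = sorted(organic_views)
    if not ordered:
        raise ValueError("need at least one traffic value")
    index = min(int(len(ordered) * share), len(ordered) - 1)
    return ordered[index]

print(monthly_average(450, 5))  # 90.0
views = [3, 12, 40, 95, 210, 480, 900, 1500, 4200, 12000]  # hypothetical
cutoff = bottom_share_cutoff(views)
print(cutoff, [v for v in views if v < cutoff])  # 40 [3, 12]
```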

Copy and paste the results into a new sheet titled "Lowest-Traffic Pages". (Don't forget to use "Paste Special > Values Only" so you don't lose the results of your formulas.) Add a filter to the top row.

Now, click the filter arrow on Column B ("All Traffic [Date Range]"), and choose "Sort: Z → A."

Are there any pages that received way more regular traffic than organic? I found several of these in my analysis; for instance, the first URL in my sheet is a blog page that gets thousands of views every week from paid social ads:

To ensure you don't redirect or delete any pages that get a significant amount of traffic from non-organic sources, remove everything above a certain number -- mine was 1,000, but again, tweak this to reflect your property's size.
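Here are both filters together as a hypothetical Python sketch. `Page` and `pruning_candidates` are made-up names, and the defaults (450 organic, 1,000 total) are just the numbers from my audit, so swap in your own.

```python
from dataclasses import dataclass

@dataclass
class Page:
    url: str
    all_views: int      # "All Traffic [Date Range]" unique pageviews
    organic_views: int  # "Organic Traffic [Date Range]" unique pageviews

def pruning_candidates(pages, organic_max=450, all_traffic_max=1000):
    """Keep low-organic pages, minus any that still get lots of non-organic traffic."""
    return [
        p for p in pages
        if p.organic_views < organic_max and p.all_views <= all_traffic_max
    ]

pages = [
    Page("blog.hubspot.com/paid-social-favorite", 5000, 100),  # hypothetical
    Page("blog.hubspot.com/forgotten-post", 300, 20),
    Page("blog.hubspot.com/decent-post", 900, 600),
]
print([p.url for p in pruning_candidates(pages)])
```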

There are three options for every page left:

- Delete

- Redirect

- Historically optimize (in other words, update and republish it)

Here's how to evaluate each post:

- Delete: If a page doesn't have any backlinks and the content isn't salvageable, remove it.

- Redirect: If a page has one or more backlinks and the content isn't salvageable, or there's a page that's ranking higher for the same set of keywords, redirect it to the most similar page.

- Historically optimize: If a page has one or more backlinks, there are a few obvious ways to improve the content (updating the copy, making it more comprehensive, adding new sections and removing irrelevant ones, etc.), and it's not competing with another page on your site, earmark it for historical optimization.
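If you're handing these rules to a freelancer or a script, it helps to pin them down precisely. Here's one reading of them as a Python function (a sketch; `decide` and its arguments are hypothetical). Two of the inputs, whether the content is salvageable and whether a stronger page already ranks for the same keywords, are judgment calls you'd record in the sheet, not something the crawl data can tell you. The rules don't say what to do with a salvageable page that has no backlinks, so the sketch flags those for a human.

```python
def decide(backlinks, salvageable, stronger_page_exists):
    """Apply the delete / redirect / historically-optimize rules to one page."""
    if stronger_page_exists:
        return "redirect"  # another page already ranks higher for these keywords
    if backlinks == 0 and not salvageable:
        return "delete"
    if backlinks >= 1 and not salvageable:
        return "redirect"
    if backlinks >= 1 and salvageable:
        return "historically optimize"
    return "review manually"  # no backlinks but salvageable: not covered by the rules

print(decide(backlinks=15, salvageable=True, stronger_page_exists=False))
# historically optimize
```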

Depending on the page, factor in the other information you have.

For example, maybe a page has 15 backlinks and a URL rating of 19. The word count is 800 -- so it's not thin content -- and judging by its title, it covers a topic that's on-brand and relevant to your audience.

However, in the past six months it's gotten just 10 pageviews from organic.

If you look a bit more closely, you see its crawl depth is 4 (pretty far away from the homepage), it's only got one internal link, and it hasn't been modified in a year.

That means you could probably immediately improve this page's performance by making some minor updates, republishing it, moving it a few clicks closer to the homepage, and adding some internal links.

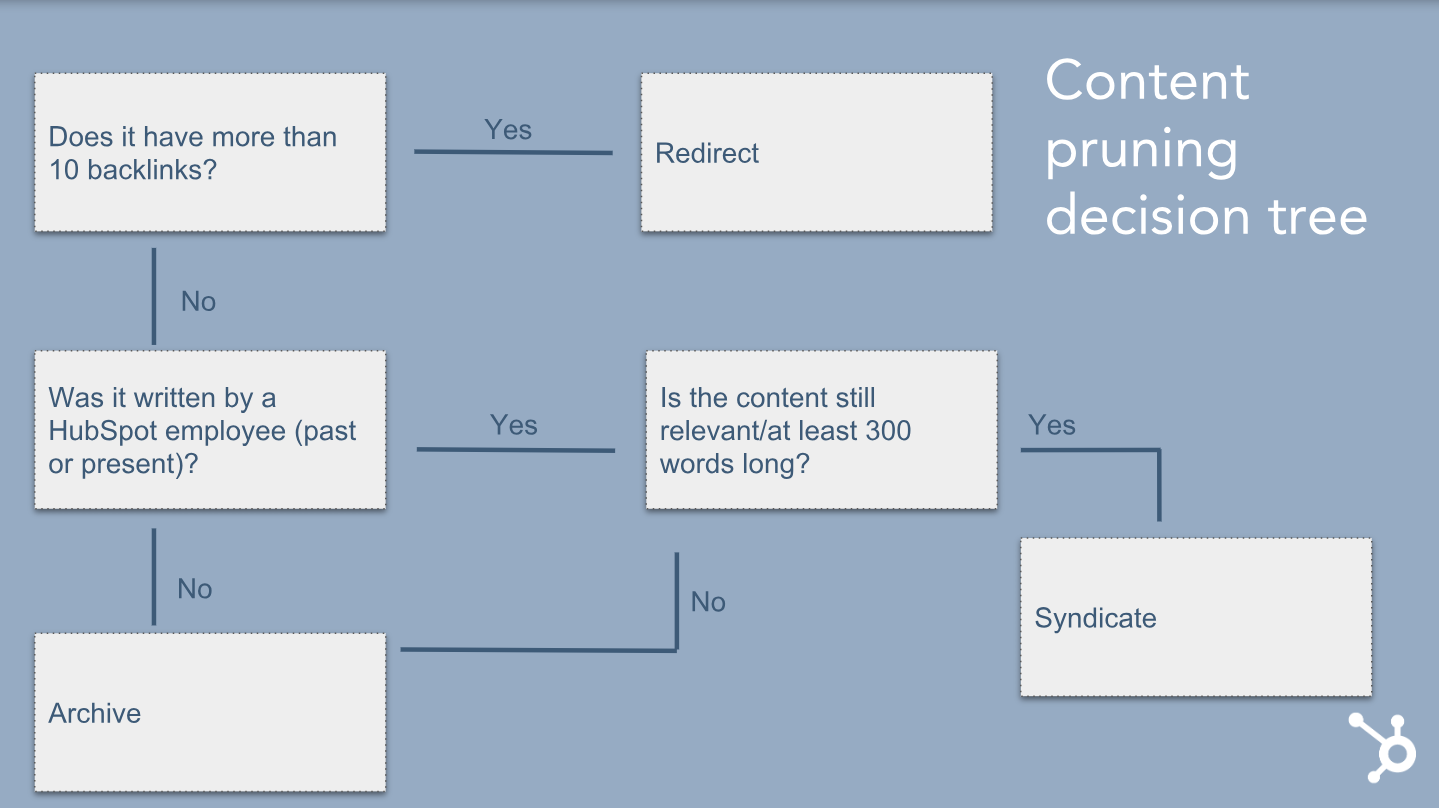

I recommend illustrating the parts of the process you'll use for every page with a decision tree, like this one:

You'll notice one major difference: instead of "historically optimize", our third option was "syndicate".

Publishing the articles we removed to external sites so we could build links was a brilliant idea from Matt Howells-Barby.

Irina Nica, who's the head of link-building on the HubSpot SEO team, is currently working with a team of freelancers to pitch the content we identified as syndication candidates to external sites. When they accept and publish the content, we get incredibly valuable backlinks to our product pages and blog posts.

To avoid any situation where a guest contributor found a post they'd written for HubSpot several years ago on a different site, we made sure all syndication candidates came from current or former HubSpot employees.

If you have enough content, syndicating your "pruned" pages will get you even more out of this project.

Speaking of "enough" content: as I mentioned earlier, I needed to go through this decision tree for 3,000+ URLs.

There isn't enough mindless TV in the world to get me through a task that big.

Here's how I'd think about the scope:

- 500 URLs or fewer: evaluate them manually. Expense that month's Netflix subscription fee.

- 500-plus URLs: evaluate the top 500 URLs manually and hire a freelancer or virtual assistant (VA) to review the rest.

No matter what, you should look at the URLs with the most backlinks yourself. Some of the pages that qualify for pruning based on low traffic may have hundreds of backlinks.

You need to be extra careful with these redirects; if you redirect a blog post on, say, "Facebook Ads Best Policies" to one about YouTube Marketing, the authority from the backlinks to the former won't pass over to the latter because the content is so different.

HubSpot's historical optimization expert Braden Becker and I looked at every page with 60+ backlinks (which turned out to be roughly 350 pages) and manually tagged each as "Archive", "Redirect", or "Syndicate." Then, I hired a freelancer to review the remaining 2,650.
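Here's that triage as a hypothetical sketch: `split_for_review` is a made-up helper, and the 60-backlink threshold is just the cutoff we used. It sorts both queues so the most-linked pages, where a bad redirect costs the most, get looked at first.

```python
def split_for_review(pages, backlink_threshold=60):
    """Split (url, backlinks) pairs into in-house and outsourced review queues."""
    ordered = sorted(pages, key=lambda page: page[1], reverse=True)
    in_house = [page for page in ordered if page[1] >= backlink_threshold]
    outsourced = [page for page in ordered if page[1] < backlink_threshold]
    return in_house, outsourced

pages = [("post-a", 10), ("post-b", 120), ("post-c", 60), ("post-d", 0)]  # hypothetical
in_house, outsourced = split_for_review(pages)
print(len(in_house), len(outsourced))  # 2 2
```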

Once you've tagged all the posts in your spreadsheet, you'll need to go through and actually archive, redirect, or update each one.

Because we were dealing with so many, our developer Taylor Swyter created a script that would automatically archive or redirect every URL. He also created a script that would remove internal links from HubSpot content to the posts we were removing. The last thing we wanted was a huge spike in broken links on the blog.

If you're doing this by hand, remember to change any internal links going to the pages you're removing.
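For a sense of what the scripted version involves, here's a hypothetical Python sketch (not the script Taylor wrote). It assumes hrefs appear in your HTML exactly as they do in your redirect and deletion lists and that attributes use double quotes; `rewrite_internal_links` is an illustrative name.

```python
import re

# <a ...href="...">text</a>. A regex is fine for a sketch; a real HTML parser is
# safer on messy markup.
LINK = re.compile(r'<a\s[^>]*?href="([^"]*)"[^>]*>(.*?)</a>', re.IGNORECASE | re.DOTALL)

def rewrite_internal_links(html, redirects, deleted):
    """Repoint links to redirected URLs and unwrap links to deleted ones.

    `redirects` maps old URL -> new URL; `deleted` is a set of removed URLs.
    Links to deleted pages keep their anchor text but lose the <a> tag.
    """
    def fix(match):
        href, text = match.group(1), match.group(2)
        if href in deleted:
            return text
        if href in redirects:
            whole, offset = match.group(0), match.start(0)
            start, end = match.start(1) - offset, match.end(1) - offset
            return whole[:start] + redirects[href] + whole[end:]
        return match.group(0)

    return LINK.sub(fix, html)

html = '<p>Read <a href="/old-post">this</a> and <a class="x" href="/gone">that</a>.</p>'
print(rewrite_internal_links(html, {"/old-post": "/new-post"}, {"/gone"}))
# <p>Read <a href="/new-post">this</a> and that.</p>
```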

I also recommend doing this in stages. Archive a batch of posts, wait a week and monitor your traffic, archive the next batch, wait a week and monitor your traffic, etc. The same concept applies with redirects: batch them out instead of redirecting a ton of posts all at once.
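If you're batching a few thousand URLs, a tiny helper can lay out the schedule. This sketch is hypothetical: the batch size, start date, and URLs are placeholders, and "one batch per week" simply mirrors the cadence above.

```python
from datetime import date, timedelta

def weekly_batches(urls, batch_size, start):
    """Yield (go_live_date, batch) pairs: one batch per week, starting on `start`."""
    for week, i in enumerate(range(0, len(urls), batch_size)):
        yield start + timedelta(weeks=week), urls[i:i + batch_size]

to_archive = [f"blog.hubspot.com/post-{n}" for n in range(1, 6)]  # hypothetical
for day, batch in weekly_batches(to_archive, batch_size=2, start=date(2019, 2, 4)):
    print(day.isoformat(), batch)
```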

To remove outdated content from Google, go to the URLs removal page of the old Search Console, and then follow the steps listed above.

This option is temporary -- to remove old content permanently, you must delete (404) or redirect (301) the source page.

Also, this won't work unless you're the verified property owner of the site for the URL you're submitting. Follow these instructions to request removal of an outdated/archived page you don't own.

Our Results

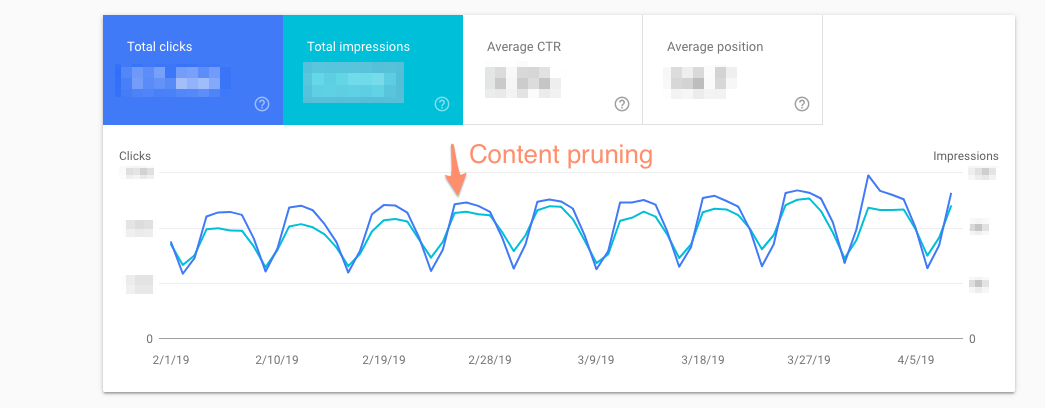

So, what happened after we deleted those 3,000 blog posts?

First, we saw our traffic go up and to the right:

It's worth pointing out content pruning is definitely not the sole cause of growth: it's one of many things we're doing right, like publishing new content, optimizing existing content, pushing technical fixes, etc.

Our crawl budget has been significantly impacted -- way above Victor's expectations, in fact.

Here's his plain-English version of the results:

"As of two weeks ago, we're able to submit content, get it indexed, and start driving traffic from Google search in just a matter of minutes or an hour. For context, indexation often takes hours and days for the average website."

And the technical one:

"We saw a 20% decrease in crawls, but 38% decrease in the number of URIs crawled, which can partially be explained by the huge drop in JS crawls (50%!) and CSS crawls (36%!) from pruning. When URIs crawled decreases greater than the total number of crawls, existing URI's and their corresponding images, JS, and CSS files are being 'understood' by GoogleBot better in the crawl stage of technical SEO."
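To unpack the technical version with Victor's own numbers: assuming the 20% and 38% decreases compare the same before and after periods (the exact windows aren't stated here), the average number of URIs fetched per crawl changed by

```latex
\frac{\text{URIs per crawl (after)}}{\text{URIs per crawl (before)}}
  = \frac{1 - 0.38}{1 - 0.20}
  = \frac{0.62}{0.80}
  = 0.775
```

In other words, each crawl touched about 22.5% fewer URIs on average, which is the gap Victor is pointing to when URIs crawled fall faster than total crawls.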

Additionally, Irina built hundreds of links using content from the pruning.

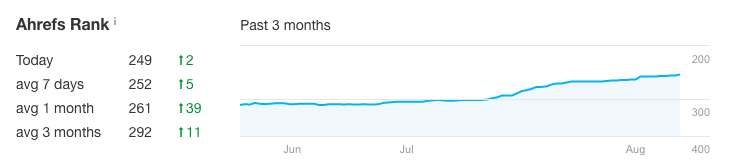

Finally, our Ahrefs rank moved up steadily -- we're now sitting at 249, which means there are only 248 websites in the Ahrefs database with stronger backlink profiles.

Ultimately, this isn't necessarily an easy task, but the rewards you'll reap are undeniably worth the hassle. By cleaning up your site, you're able to boost your SEO rankings on high-performing pages, while ensuring your readers only find your best content, not a random event page from 2014. A win-win.
